Partial Randomization

So far, we have learned how to specify a causal graph for simulation and sample from that to synthesize a dataset. The question is what does it take to specify a graph? The answer is, every detail of that graph, down to the last coefficient of each equation. As soon as we do not specify a parameter or a coefficient, the whole process stops.

Next question is, how do we know the known parts of the graph? It is by some prior knowledge that we specifically specify those parts. For instance, we know about an Age variable that follows a normal distribution with known mean and standard deviation, and that it doesn’t have a parent within the simulation variables. From the evaluation point of view, the known parts are specified by the assumptions of the to-be-tested model: If you claim that your model performs well in linear settings, this is the aspect we know about the simulation study which is suitable for evaluating your model; all the rest (e.g. number of variables) shall not be fixed, or we have restricted the testing space of the model to somewhat more restricted than the promised space.

Finally, what to do when we hold partial information about a simulation setting? The answer is, we sample from a graph space of all possible simulation mechanisms that comply with our given information, yet vary across the undefined parts. As the PA-rtially R-andomized part of the PARCS name indicates, it provides a solution, compatible with the graph simulation workspace, for resolving this issue.

Imagine we want to simulate a scenario with two normally distributed (treatment) and one Bernoulli (outcome) random variables. Our goal is to test and empirically evaluate an algorithm that claims to estimate the causal effect of each treatment on the outcome when the treatments are independent, and the causal relations are linear.

Given this information:

The two treatment must have no edge, while both will have an edge to the outcome.

Only the identity edge function, and linear terms for the parameters are allowed.

One possible outline for such a scenario is:

A_1: normal(mu_=2, sigma_=1)

A_2: normal(mu_=3, sigma_=1)

Y: bernoulli(p_=A_1+2A_2), correction[]

# infer edges

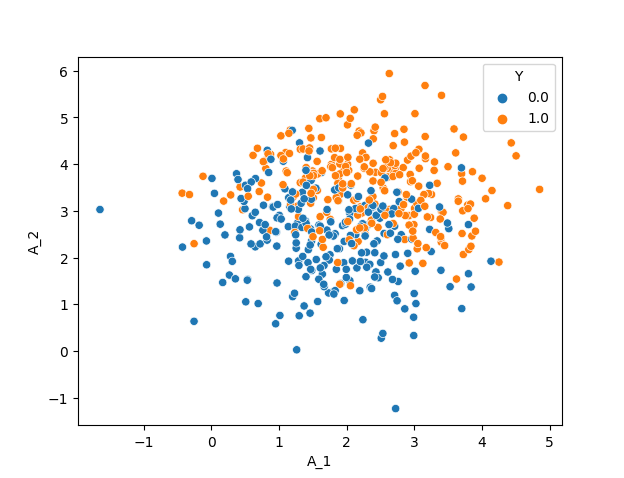

The resulting dataset with 500 datapoints is:

But this is only one out of many complying simulation settings for your problem.

Randomizing the Parameters

Now let’s have a look at the following outline:

A_1: normal(mu_=?, sigma_=?)

A_2: normal(mu_=?, sigma_=?)

Y: bernoulli(p_=?), correction[]

A_1->Y: identity()

A_2->Y: identity()

The question marks which has replaced the distributions’ parameters tell PARCS that we do not want to fix those parameters. Then PARCS samples from the space of possible parameters and each time, returns one specific graph. But sampling based on what? Based on a randomization guideline that we write as another outline:

nodes:

normal:

mu_: [ [f-range, -2, 2], 0, 0 ]

sigma_: [ [f-range, 1, 4], 0, 0]

bernoulli:

p_: [ [f-range, -1, 1], [f-range, -5, -3, 3, 5], 0]

In this guideline (which can be a dictionary, similar to graph outlines):

The node entry includes information about one normal distribution and one Bernoulli distribution. Each distribution, in return, holds information about their parameters.

The value of each parameter key is a list of 3 elements. This corresponds to bias, linear, and interaction factors. These elements are called directives, as they describe the search space for the coefficients of these factors.

The directives for the linear and interaction factors of

mu_andsigma_, as well as for the interaction factors ofp_is zero, meaning that these coefficients will always be zero when PARCS is sampling the coefficients.The rest of the directives start with

f-range. This tells the PARCS to sample a float value from the given range.[f-range, -2, 2]gives the \([-2, 2]\) range, and[f-range, -5, -3, 3, 5]gives \([-5, 3] \cup [3, 5]\). Other possible directive types arei-rangefor sampling from a discrete uniform, andchoicefrom a list of options that follows. See here for a detailed introduction of the guideline outline.

This outline will then be used to instantiate a Guideline object and randomize the Description using the .randomize_parameters() method:

1from pyparcs import Description, Graph, Guideline

2from matplotlib import pyplot as plt

3import seaborn as sns

4import numpy

5numpy.random.seed(42)

6

7guideline = Guideline(

8 {'nodes': {'normal': {'mu_': [['f-range', -2, 2], 0, 0],

9 'sigma_': [['f-range', 1, 4], 0, 0]},

10 'bernoulli': {'p_': [['f-range', -1, 1], ['f-range', -5, -3, 3, 5], 0]}}}

11)

12

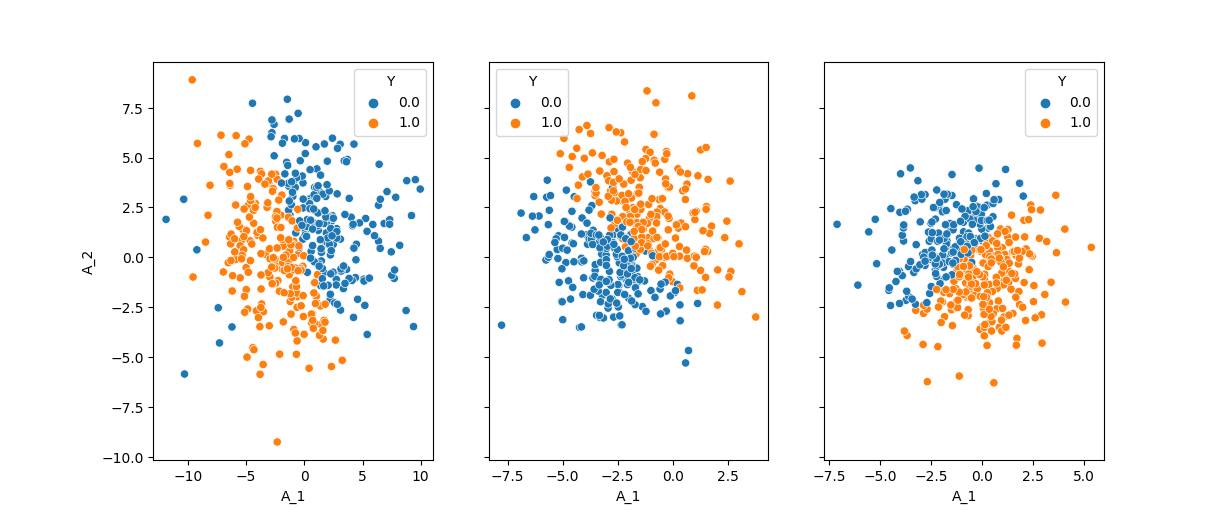

13f, axes = plt.subplots(1, 3, sharey=True)

14for i in range(3):

15 description = Description({'A_1': 'normal(mu_=?, sigma_=?)',

16 'A_2': 'normal(mu_=?, sigma_=?)',

17 'Y': 'bernoulli(p_=?), correction[target_mean=0.5]',

18 'A_1->Y': 'identity()', 'A_2->Y': 'identity()'})

19 description.randomize_parameters(guideline)

20 graph = Graph(description)

21 samples, _ = graph.sample(400)

22 sns.scatterplot(samples, x='A_1', y='A_2', hue='Y', ax=axes[i])

23

24plt.show()

Using this method, you can also tell PARCS to randomly select the distributions and edge functions as well. This is done using the random keyword, when PARCS chooses among the provided distributions and functions in the guideline:

1guideline = Guideline(

2 {'nodes': {'normal': {'mu_': [['f-range', -2, 2], 0, 0],

3 'sigma_': [['f-range', 1, 4], 0, 0]},

4 'bernoulli': {'p_': [['f-range', -1, 1], ['f-range', -5, -3, 3, 5], 0]},

5 'exponential': {'lambda_': [['f-range', -1, 1], ['f-range', -5, -3, 3, 5], 0]},

6 'poisson': {'lambda_': [['f-range', -1, 1], ['f-range', -5, -3, 3, 5], 0]}}

7 }

8)

9

10for i in range(4):

11 description = Description({'A_1': 'random',

12 'A_2': 'random',

13 'Y': 'bernoulli(p_=?), correction[target_mean=0.5]',

14 'A_1->Y': 'identity()', 'A_2->Y': 'identity()'})

15 description.randomize_parameters(guideline)

16 print(f'=== round {i} ===')

17 print(description.nodes['A_1']['output_distribution'],

18 description.nodes['A_2']['output_distribution'])

19# === round 0 ===

20# exponential exponential

21# === round 1 ===

22# poisson poisson

23# === round 2 ===

24# poisson normal

25# === round 3 ===

26# poisson exponential

Connection to a Subgraph

Assume the following structural equations model.

where \(Z\) and \(L\) are random vectors, \(A\) and \(B\) are a lower triangular coefficient matrices, and \(C\) is another arbitrary coefficient matrix. In this model, \(L\) and \(Z\) can be normal simulation graphs, while \(Z\) receives causal edges from \(L\) via the \(C\) matrix.

In PARCS, this setting is interpreted as connecting two descriptions, assuming that the flow of edge is one way from a parent to a child graph. This can be done via another randomization method, the .randomize_connection_to()

1from pyparcs import Description, Guideline

2import numpy

3numpy.random.seed(42)

4

5guideline = Guideline(

6 {'nodes': {'bernoulli': {'p_': [['f-range', -1, 1], ['f-range', -5, -3, 3, 5], 0]}},

7 'edges': {'identity': None},

8 'graph': {'density': 1}}

9)

10

11outline_L = {'L_1': 'normal(mu_=0, sigma_=1)', 'L_2': 'normal(mu_=L_1^2+L_1, sigma_=1)'}

12outline_Z = {'Z_1': 'bernoulli(p_=0.3)', 'Z_2': 'bernoulli(p_=0.5)'}

13

14description = Description(outline_L, infer_edges=True)

15description.randomize_connection_to(outline_Z, guideline, infer_edges=True)

16

17print(description.nodes.keys())

18# dict_keys(['L_1', 'L_2', 'Z_1', 'Z_2'])

19

20print(description.edges.keys())

21# dict_keys(['L_1->L_2', 'L_1->Z_1', 'L_2->Z_1', 'L_1->Z_2', 'L_2->Z_2'])

22

23print(description.nodes['Z_1']['dist_params_coefs']['p_'])

24# {'bias': 0.3, 'linear': [-4.11, -4.88], 'interactions': [0, 0, 0]}

In this code:

Line 15 randomizes a connection from the main description to a child outline. The passed argument in this line is clearly for parsing the child outline (the parent outline has already been parsed)

In the guideline dict (line 8) there is a graph key, including the information about density. The \([0, 1]\) range density directive, determines the sparsity of the connection edges. As we have chosen

'density':1, the randomization method makes all the possible edges, as shown in line 21In line 24, we have printed the info on Node

Z_1after randomization. The bias term for the node is 0.3, which follows the outline information. You can see, however, that two linear coefficients (forZ_1andZ_2) are non zero, but the interaction coefficients are. This follows the directives given in the guideline. but more importantly, you can see howrandomize_connection_to()method changes the parameter equations: In an additive fashion, it adds the new terms for the new parents to the existing equation. Thus, a limitation of this method is that no interaction terms will be added among existing and new parents.

In this process, we can prevent certain nodes in the parent description from having out-going edges to the child nodes. This is controlled by using a C tag, similar to the parameter randomization method (only the lines with the corresponding tags will be invoked):

L_2 only has out-going edges1outline_L = {'L_1': 'normal(mu_=0, sigma_=1)',

2 'L_2': 'normal(mu_=L_1^2+L_1, sigma_=1), tags[C1]'}

3outline_Z = {'Z_1': 'bernoulli(p_=0.3)', 'Z_2': 'bernoulli(p_=0.5)'}

4

5description = Description(outline_L, infer_edges=True)

6description.randomize_connection_to(outline_Z, guideline, infer_edges=True, tag='C1')

7

8print(description.edges.keys())

9# dict_keys(['L_1->L_2', 'L_2->Z_1', 'L_2->Z_2'])

Randomize connection method accepts other arguments to control the randomization process, e.g. to apply a custom mask to the parent-to-child adjacency matrix, or preventing certain parameters from being affected; all these you can find out in detail in the description class API doc.

A Random Graph from Scratch

What if we want to start with a completely random graph, which still follows the directives of a guideline? This can be done using the RandomDescription class:

1from pyparcs import RandomDescription, Guideline, Graph

2import numpy

3numpy.random.seed(42)

4

5guideline = Guideline(

6 {'nodes': {'bernoulli': {'p_': [['f-range', -1, 1], ['f-range', -5, -3, 3, 5], 0]},

7 'poisson': {'lambda_': [['f-range', 2, 4], ['f-range', 3, 5], 0]}},

8 'edges': {'identity': None},

9 'graph': {'density': ['f-range', 0.4, 1], 'num_nodes': ['i-range', 3, 10]}}

10)

11

12description = RandomDescription(guideline, node_prefix='X')

13graph = Graph(description)

14

15samples, _ = graph.sample(4)

16print(samples)

17# X_5 X_0 X_3 X_6 X_2 X_1 X_8 X_4 X_7

18# 0 0.0 1.0 3.0 2.0 0.0 1.0 1.0 0.0 2.0

19# 1 0.0 1.0 1.0 2.0 1.0 0.0 0.0 0.0 0.0

20# 2 1.0 0.0 1.0 0.0 1.0 0.0 0.0 1.0 0.0

21# 3 1.0 0.0 2.0 1.0 3.0 4.0 0.0 0.0 3.0

The graph key in the guidelines plays the main role in this instantiation. The num_nodes and density parameters specify the general specifications of the graph. When the nodes and edges are spawned, the distributions, functions and parameters will be randomized as before.